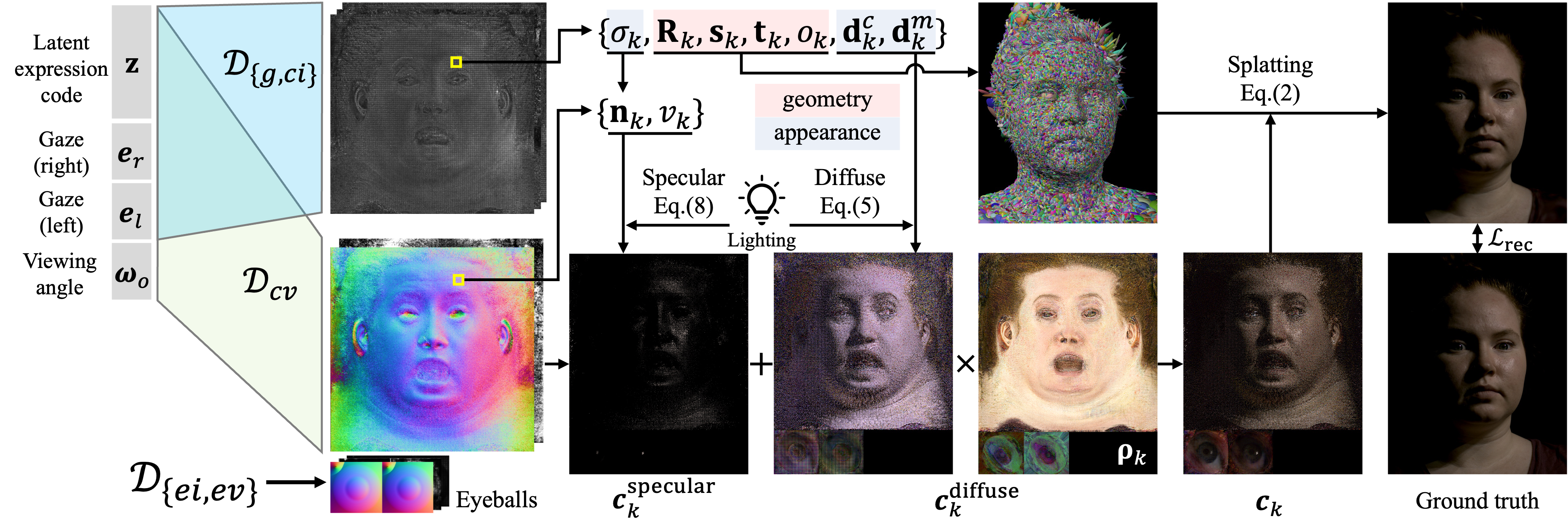

Method

Relightable Gaussian Codec Avatars are conditioned on a latent expression code, gaze information, and a target view direction. The underlying geometry is parameterized by 3D Gaussians, which can be rendered efficiently with Gaussian Splatting. To enable efficient relighting under diverse illumination, we present a novel learnable radiance transfer based on diffuse spherical harmonics and specular spherical Gaussians. Please refer to the paper for more details.
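In spherical-harmonics (SH) radiance transfer, the outgoing diffuse radiance is linear in the lighting. Relighting one Gaussian's diffuse color therefore reduces to a dot product between its transfer coefficients and the SH coefficients of the incoming light. The Python sketch below (standard library only) illustrates this idea for a single color channel. It assumes real SH up to band 2 (9 coefficients) and distant lights projected into SH. The function names, the band limit, and the example coefficients are illustrative assumptions, not the paper's implementation.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Return v scaled to unit length."""
    n = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    if n == 0.0:
        raise ValueError("direction must be non-zero")
    return (v[0] / n, v[1] / n, v[2] / n)


def sh_basis(direction: Vec3) -> List[float]:
    """Real spherical harmonics Y_k up to band l = 2 (9 values) at a direction."""
    x, y, z = normalize(direction)
    return [
        0.282095,                        # l=0
        0.488603 * y,                    # l=1, m=-1
        0.488603 * z,                    # l=1, m=0
        0.488603 * x,                    # l=1, m=1
        1.092548 * x * y,                # l=2, m=-2
        1.092548 * y * z,                # l=2, m=-1
        0.315392 * (3.0 * z * z - 1.0),  # l=2, m=0
        1.092548 * x * z,                # l=2, m=1
        0.546274 * (x * x - y * y),      # l=2, m=2
    ]


def project_directional_light(direction: Vec3, intensity: float) -> List[float]:
    """SH coefficients of a distant (delta) light arriving from `direction`."""
    return [intensity * b for b in sh_basis(direction)]


def add_lighting(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Lighting is additive in SH space: sum coefficients term by term."""
    return [x + y for x, y in zip(a, b)]


def diffuse_radiance(transfer: Sequence[float], lighting: Sequence[float]) -> float:
    """Diffuse radiance = <transfer, lighting> for an orthonormal SH basis."""
    if len(transfer) != len(lighting):
        raise ValueError("transfer and lighting must have the same length")
    return sum(t * l for t, l in zip(transfer, lighting))


# Hypothetical per-Gaussian transfer coefficients (in the model these would be learned).
transfer = [0.8, 0.0, 0.4, 0.1, 0.0, 0.0, 0.05, 0.0, 0.0]
key = project_directional_light((0.0, 0.3, 1.0), 3.0)
fill = project_directional_light((1.0, 0.0, 0.2), 1.0)
print(diffuse_radiance(transfer, add_lighting(key, fill)))
```

Changing the illumination only changes `lighting`, while the per-Gaussian `transfer` stays fixed. This is the usual reason SH transfer keeps relighting cheap at render time. A full renderer would repeat the dot product per RGB channel and per Gaussian.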

Disentangled Controls

Our model enables disentangled control of expression, gaze, view, and lighting, as demonstrated below.

Gaze

View

Lighting

You can try our interactive viewer, which offers individual control of view, expression, and lighting, here.

Applications

Interactive Relighting and Rendering in VR

Relightable Gaussian Codec Avatars can be rendered in real time from any viewpoint in a VR headset. We also demonstrate interactive point-light control as well as relighting under natural illumination.
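The page names specular spherical Gaussians but does not give the specular formula. The Python sketch below (standard library only) therefore shows only a generic version of the idea for a single point light. It uses a spherical Gaussian lobe G(ω; r, λ) = exp(λ(ω·r - 1)) centered on the mirror-reflection direction r, scaled by a specular albedo, the cosine term, and inverse-square falloff. The lobe placement, the falloff, and the names `sharpness` and `specular_albedo` are assumptions for illustration, not the paper's formulation.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("vector must be non-zero")
    return (v[0] / n, v[1] / n, v[2] / n)


def reflect(v: Vec3, n: Vec3) -> Vec3:
    """Mirror unit vector v (surface -> eye) about unit normal n: r = 2(n.v)n - v."""
    k = 2.0 * dot(n, v)
    return (k * n[0] - v[0], k * n[1] - v[1], k * n[2] - v[2])


def spherical_gaussian(direction: Vec3, axis: Vec3, sharpness: float) -> float:
    """G(w; axis, sharpness) = exp(sharpness * (w . axis - 1)); equals 1 on the axis."""
    return math.exp(sharpness * (dot(normalize(direction), normalize(axis)) - 1.0))


def specular_point_light(
    position: Vec3, normal: Vec3, eye: Vec3, light_pos: Vec3,
    light_intensity: float, sharpness: float, specular_albedo: float,
) -> float:
    """Specular radiance toward the eye from one point light (hypothetical model)."""
    n = normalize(normal)
    v = normalize(sub(eye, position))
    to_light = sub(light_pos, position)
    dist2 = dot(to_light, to_light)
    l = normalize(to_light)
    r = reflect(v, n)                           # lobe centered on the mirror direction
    lobe = spherical_gaussian(l, r, sharpness)  # larger sharpness = tighter highlight
    cos_term = max(0.0, dot(n, l))              # no light from below the surface
    return specular_albedo * light_intensity * lobe * cos_term / dist2  # inverse-square falloff


# Moving the light interactively only changes `light_pos`.
for x in (0.0, 0.5, 1.0):
    print(x, specular_point_light((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 5.0),
                                  (x, 0.0, 2.0), 8.0, 50.0, 0.5))
```

Dragging a point light in a viewer amounts to re-evaluating this per Gaussian with a new `light_pos`. Natural illumination would instead require integrating the lobe against an environment map, which this sketch does not attempt.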

Video-driven Animation

Relightable Gaussian Codec Avatars can be driven live by video streamed from head-mounted cameras (HMCs).