| Name | Shunsuke Saito (齋藤 隼介) |
| --- | --- |
| Interest | Human digitization algorithms that work in practice |
| E-mail | {firstname}.{lastname}16 (at) gmail.com |

I am a Research Scientist at Meta Codec Avatars Lab in Pittsburgh, where I lead the effort on next-generation digital humans (foundation models, relighting, faces, hands, full body, hair, clothing, accessories, and more!). For example, I am one of the core contributors to Codec Avatar 2.0, which was featured at Meta Connect 2022 and in Lex Fridman's metaverse interview with Mark Zuckerberg. If you are interested in an internship with us, feel free to email or DM me!

Relightable Full-body Gaussian Codec Avatars

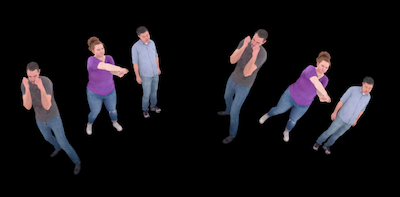

SqueezeMe: Mobile-Ready Distillation of Gaussian Full-Body Avatars

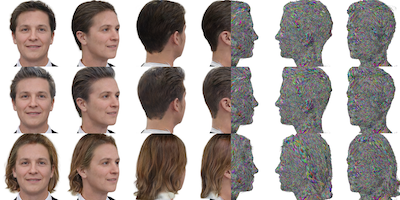

3DGH: 3D Head Generation with Composable Hair and Face

Pippo: High-Resolution Multi-View Humans from a Single Image

FRESA: Feedforward Reconstruction of Personalized Skinned Avatars from Few Images

PGC: Photorealistic Simulation-Ready Garments from a Single Pose

3DTopia-XL: Scaling High-quality 3D Asset Generation via Primitive Diffusion

Vid2Avatar-Pro: Authentic Avatar from Videos in the Wild via Universal Prior

REWIND: Real-Time Egocentric Whole-Body Motion Diffusion with Exemplar-Based Identity Conditioning

Agent-to-Sim: Learning Interactive Behavior Models from Casual Longitudinal Videos

D3GA: Drivable 3D Gaussian Avatars

Codec Avatar Studio: Paired Human Captures for Complete, Driveable, and Generalizable Avatars

URAvatar: Universal Relightable Gaussian Codec Avatars

Manifold Sampling for Differentiable Uncertainty in Radiance Fields

Sapiens: Foundation for Human Vision Models

Bridging the Gap: Studio-like Avatar Creation from a Monocular Phone Capture

Expressive Whole-Body 3D Gaussian Avatar

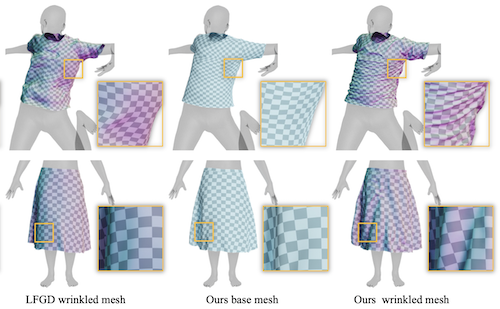

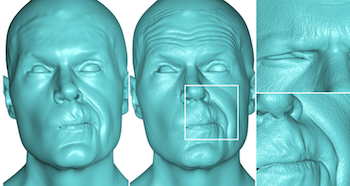

High-Fidelity Modeling of Generalizable Wrinkle Deformation

Relightable Gaussian Codec Avatars

URHand: Universal Relightable Hands

GALA: Generating Animatable Layered Assets from a Single Scan

InterHandGen: Two-Hand Interaction Generation via Cascaded Reverse Diffusion

Diffusion Shape Prior for Wrinkle-Accurate Cloth Registration

A Dataset of Relighted 3D Interacting Hands

Diffusion Posterior Illumination for Ambiguity-aware Inverse Rendering

Single-Image 3D Human Digitization with Shape-guided Diffusion

NCHO: Unsupervised Learning for Neural 3D Composition of Humans and Objects

CT2Hair: High-Fidelity 3D Hair Modeling using Computed Tomography

RelightableHands: Efficient Neural Relighting of Articulated Hand Models

MEGANE: Morphable Eyeglass and Avatar Network

Dressing Avatars: Deep Photorealistic Appearance for Physically Simulated Clothing

KeypointNeRF: Generalizing Image-based Volumetric Avatars using Relative Spatial Encoding of Keypoints

AutoAvatar: Autoregressive Neural Fields for Dynamic Avatar Modeling

Neural Strands: Learning Hair Geometry and Appearance from Multi-View Images

Authentic Volumetric Avatars From a Phone Scan

Drivable Volumetric Avatars Using Texel-aligned Features

COAP: Compositional Articulated Occupancy of People

Neural Fields in Visual Computing and Beyond

ARCH++: Animation-Ready Clothed Human Reconstruction Revisited

Deep Relightable Appearance Models for Animatable Faces

SCANimate: Weakly Supervised Learning of Skinned Clothed Avatar Networks

SCALE: Learning to Model Clothed 3D Humans with a Surface Codec of Articulated Local Elements

Pixel-Aligned Volumetric Avatars

Monocular Real-Time Volumetric Performance Capture

PIFuHD: Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization

Learning to Infer Implicit Surfaces without 3D Supervision

PIFu: Pixel-Aligned Implicit Function for High-Resolution Clothed Human Digitization

SiCloPe: Silhouette-Based Clothed People

3D Hair Synthesis Using Volumetric Variational Autoencoders

paGAN: Real-time Avatars Using Dynamic Textures

High-Fidelity Facial Reflectance and Geometry Inference From an Unconstrained Image

Mesoscopic Facial Geometry Inference Using Deep Neural Networks

Avatar Digitization from a Single Image for Real-Time Rendering

Quasi-Developable Garment Transfer for Animals

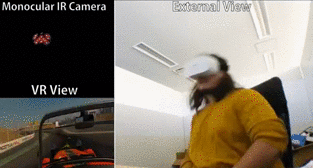

Outside-in Monocular IR Camera based HMD Pose Estimation via Geometric Optimization

Learning Dense Facial Correspondences in Unconstrained Images

Realistic Dynamic Facial Textures From a Single Image Using GANs

Production-Level Facial Performance Capture Using Deep Convolutional Neural Networks

Photorealistic Facial Texture Inference Using Deep Neural Networks

Multi-View Stereo on Consistent Face Topology

High-Fidelity Facial and Speech Animation for VR HMDs

Real-Time Facial Segmentation and Performance Capture from RGB Input

Garment Transfer for Quadruped Characters

Computational Bodybuilding: Anatomically-based Modeling of Human Bodies

PatchMove: Patch-based Fast Image Interpolation with Greedy Bidirectional Correspondence

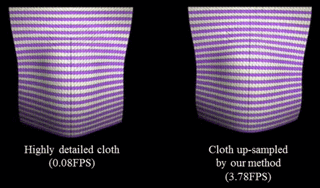

Macroscopic and Microscopic Deformation Coupling in Up-sampled Cloth Simulation

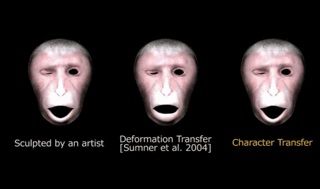

Character Transfer: Example-based individuality retargeting for facial animations

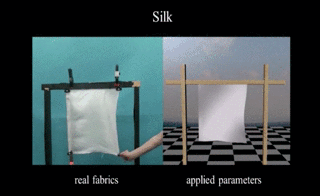

Estimating Cloth Simulation Parameter from 3D Cloth Motion Separating Dynamic from Static Motion

Shunsuke Saito

Web: www-scf.usc.edu/~saitos/

E-mail: shunsuke.saito16 (at) gmail.com

Technical Paper Reviewer:

ECCV, ICCV, IJCV, SIGGRAPH, CVPR, TPAMI, TOG, AAAI, WACV, 3DV, ACCV, TVCG, Signal Processing, SIGGRAPH Asia, CGF, PG, Eurographics, etc.

Internship:

MPI Tübingen (2020), FAIR, Adobe Research (2019), Pinscreen (2018), FRL (2017), FOVE (2015), Yahoo! Japan (2012-13)

Talk:

CMU VASC Seminar, Brown VC Seminar, BIRS Workshop, Asiagraphics Webinar (2023), Dagstuhl Seminar (2022), MPI Saarbrücken/Tübingen, Industrial Light & Magic, Dagstuhl Seminar (2019), University of Tokyo, CMU Graphics Group, VC Symposium (2018), VC/GCAD Symposium, Autodesk Research (2015)

Scholarship and Awards:

Outstanding Reviewer (ICCV2023, 3DV2020, ACCV2020), CVPR Best Paper Finalist (2019, 2021), Super Creator, IPA Exploratory Software (2015), ACM Student Research Competition Semi-Finalist (2014), MIRU Interactive Presentation Award, Okayama, Japan (2014), UCLA CSST Scholarship Program $9,036 (2013), Okuma Memorial Special Scholarship $5,000 (2011)